Presentation of our showroom and completed projects

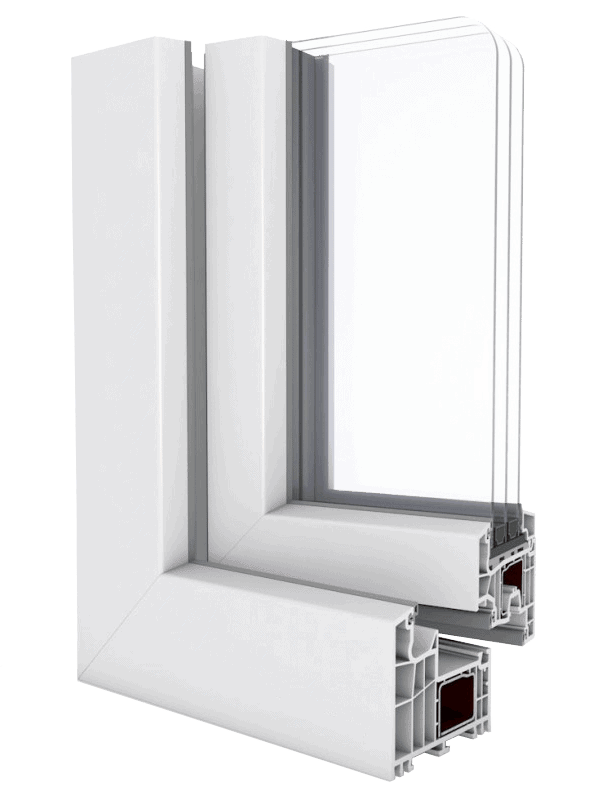

At AZ house we sell and install plastic windows, doors, and garage doors, as well as complementary products such as window sills, insect screens, and various types of interior and exterior shading systems. We offer our customers top-quality Salamander plastic windows and doors, premium Hörmann garage doors, stylish DRE interior doors, and affordable Securido security doors.

We place great emphasis on customer satisfaction, so at AZ house you will always find a professional approach and advice in choosing products, reasonable prices, and short delivery times. Our experienced, certified installers will ensure a trouble-free installation, and you are sure to be satisfied with their work. Warranty and post-warranty service for our products is a matter of course.

I recommend this company. The plastic windows were installed on an already insulated facade, with the windows facing a busy street. The installation was complicated by the original windows, which had a complex construction, and by passers-by. Despite the difficulty of the installation and the unexpected circumstances along the way, the guys stayed professional, good-humored, and precise.

Maximum satisfaction and quality work. If I were to order again, it would definitely be from this company. Discount available, quality windows, skilled and fast guys. I warmly recommend them.

Skilled, knowledgeable, and helpful guys.

Nice showroom, garage doors, windows, window sills. First-rate approach.

I am satisfied with the services; quality work at a reasonable price!

I am satisfied with the work, the product, and the approach; I can only recommend them.

Complete satisfaction. I recommend them to everyone. Professional approach and conduct.

I was satisfied with both the installation and the approach. Nice work. Thank you. I recommend them...

Thank you very much for handling our order. Everything went smoothly, although the guys had a bit of trouble getting the windows into the elevator. They had to carry them up the stairs. From my point of view, the installation itself went very well and quickly. Excellent work, I can say. The windows are great. The exterior blinds work. Thank you once again for your cooperation.

We were extremely satisfied with AZ house Jozef Vindiš. Windows 100%, fast, clean installation... We definitely recommend them.

I have already had 2 jobs completed by AZ house-Jozef Vindiš and I was satisfied every time. They always did the work 100%, and service after handover is a matter of course. I warmly recommend them!

I am very satisfied; what they promised, they delivered! A fair approach and an effort to meet the customer's requirements! If I need anything again, I will place my order with AZ house-Jozef Vindiš. I warmly recommend them!

Recommended.

Fast delivery and installation. Great price.

Recommended.

Recommended.

A helpful and professional approach from the order through to the installation itself. We are satisfied and will certainly use AZ house's services again.

We are very satisfied with our choice of company. Thank you 🙂

I would like to thank you for the perfectly done work on the windows and the balcony. I am extremely satisfied. You have a great team of guys; please thank them once more on my behalf.

AZ house supplied our plastic windows; the approach was great and the price was the best (we had other quotes too). The work was done quickly, to a high standard, and on time. Maximum satisfaction. We are counting on working together in the future as well.

Complete satisfaction on my part. They did the work quickly and 100%; the craftsmen were skilled, the communication excellent, the price outstanding, and the quality top-notch as well. I can recommend them and I am counting on working together in the future!!!

I am satisfied with the work of AZ house - Jozef Vindiš: the deadline was met, the workers' approach was excellent, and the quality of the work was excellent. I am counting on working with them again in the future 🙂

We were satisfied with the work of AZ house Jozef Vindiš. Quality, deadlines - everything was as agreed.

The garage door and windows were delivered as agreed. Excellent price and quality. I was pleasantly surprised by how quietly the garage door runs. The windows are great too. I recommend them.

AZ House installed plastic windows in our 3-room apartment in Nové Mesto nad Váhom. We are satisfied with the work, the windows, and Mr. Vindiš's approach. They also bricked up a wall at the balcony window for us, and it turned out great. To everyone who wants their windows replaced, I warmly recommend AZ house.

I can unequivocally recommend AZ house - Jozef Vindiš: communication, helpfulness, delivery on the agreed date, everything 100%. The craftsmen came, did the installation, let us check everything, made sure we were satisfied with everything, and only then left. Absolute satisfaction.

Communication with Mr. Vindiš was of a high standard, the windows were delivered exactly as agreed, the quality was excellent, and the price was the best (I had several competing quotes besides this site)... I can confidently recommend AZ house - Jozef Vindiš to anyone interested... Once again, maximum satisfaction!!!

Excellent communication and approach, a standard delivery time as agreed, and great work fitting the windows. I warmly recommend AZ house - Jozef Vindiš.

We were very satisfied with the work done by AZ house - Jozef Vindiš. They did the job to a professional standard. I can recommend the company to anyone who values a job well done.

I rate AZ house as a company with professional-quality work, a personal approach, high-quality and very attractive windows and doors, and excellent, helpful communication from the owner. We are 100% satisfied; there is absolutely nothing to fault. I would also like to thank you for your willingness and for the extra work you helped us with. Thank you, and we wish you every success in business...

We have had a good experience with AZ house: the quoted price was honored, the windows were delivered on the agreed date, and the craftsmen were fast yet did the work conscientiously. I am satisfied with their work; they arrived in the morning, and by evening we were already enjoying our new windows. Communication with Mr. Vindiš was also trouble-free, and he readily accommodated us on the date and time. I warmly recommend them as a company with a good approach to the customer! 🙂

Satisfaction ...

They installed our windows flawlessly, and we were satisfied. Thank you.

Recommended.

Absolute satisfaction. Pleasant dealings, and every point of the agreement was honored, from measurement through delivery and installation to the price. I RECOMMEND THEM!

Communication was prompt, dealings were pleasant, the agreed deadlines were met, the quality of the work and the windows is very good, and both the owner and the workers were pleasant to deal with. Thank you.

Pleasant dealings, terms met, quality and fast workmanship. That is all for now. I may get back later to add more information.

Communication with the supplier was excellent; they responded immediately to our more detailed specification and gave excellent expert advice. They adapted promptly to the customer's time pressure, carrying out the job quickly at a time that suited the client. The workers were helpful and knowledgeable; they gave advice beyond matters directly related to their own work and also carried out "extra" work. 110% SATISFACTION, RECOMMENDED.